Whenever we hear the words “quality” and “quantity,” we tend to assume an inherent trade-off between them. The same assumption is often applied to data volume and data quality, and the concern comes up in most Big Data discussions. Many of us have come to believe that we cannot amass petabytes of data in an Apache Hadoop cluster or a parallel data warehouse without also accumulating inconsistent, redundant, inaccurate and unverified junk data. This blog post argues that this notion is an oversimplification and looks at what actually lies behind the quality-versus-quantity issue.

Big Data is not the reason for most data problems

What we need to acknowledge is that most data quality issues originate in the source transactional systems, such as customer relationship management (CRM) systems and general ledgers, which typically generate data in the terabyte range. It is the responsibility of the administrators of these systems to keep their data cleansed, current and consistent. Problems can be fixed downstream through merging, matching, averaging and cleansing in intermediate stages; however, it is important to have adequate controls at the source. If the source system is not cleansed, quality suffers regardless of how much data there is: volume is not the deciding factor.
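To make "merging, matching and cleansing" a little more concrete, here is a minimal sketch of a source-side cleansing step for customer records. The `CustomerRecord` type, the `normalize` and `deduplicate` helpers, and the choice of email address as the match key are all hypothetical assumptions for illustration; real CRM matching usually needs fuzzier rules than this.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CustomerRecord:
    # Hypothetical CRM record layout, for illustration only.
    customer_id: int
    name: str
    email: str


def normalize(record: CustomerRecord) -> CustomerRecord:
    """Standardize fields so that trivially different copies compare equal."""
    return CustomerRecord(
        customer_id=record.customer_id,
        # Collapse repeated whitespace and apply consistent capitalization.
        name=" ".join(record.name.split()).title(),
        # Emails are compared case-insensitively and without stray spaces.
        email=record.email.strip().lower(),
    )


def deduplicate(records: list[CustomerRecord]) -> list[CustomerRecord]:
    """Keep the first record seen for each normalized email (the assumed match key)."""
    seen: dict[str, CustomerRecord] = {}
    for record in map(normalize, records):
        seen.setdefault(record.email, record)
    return list(seen.values())


if __name__ == "__main__":
    raw = [
        CustomerRecord(1, "ada  lovelace", "Ada@Example.com "),
        CustomerRecord(2, "Ada Lovelace", "ada@example.com"),
        CustomerRecord(3, "alan turing", "alan@example.com"),
    ]
    for rec in deduplicate(raw):
        print(rec)
```

Running rules like these once, in the source system, spares every downstream pipeline from having to repeat them.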

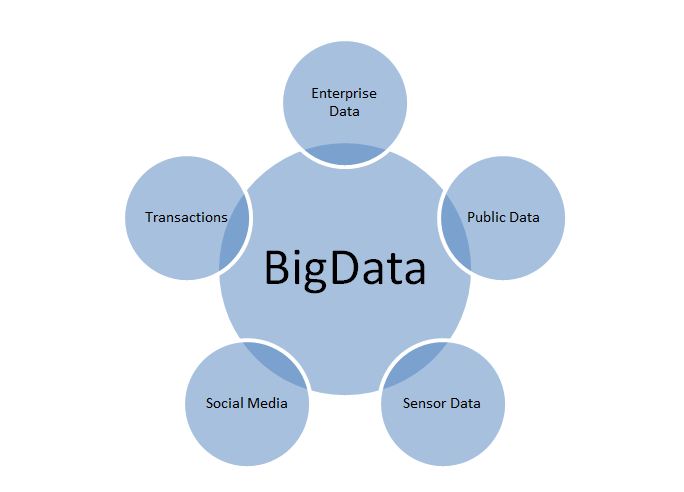

Big Data combines data sources that administrators failed to cleanse

In data warehouse systems, data quality efforts focus on maintaining core records such as customer, finance and human resources data. Big Data initiatives, by contrast, have been bringing together social marketing intelligence, data from external information silos, real-time sensor feeds, IT system logs and similar sources. Most of these sources have never been linked to the official reference data from transactional systems, and most have not been cleansed, because each was analyzed in isolation. The teams working with each source worked around its quality issues locally and did not feed corrections back into the official records. That changed with cross-source analytics, which is common in the Big Data space.
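One way to see what "not linked to the official reference data" means in practice is to attempt the join and count what fails to match. The sketch below assumes a hypothetical reference table that maps normalized customer emails to official customer IDs, and a hypothetical external feed (for example, social media mentions) whose rows may or may not carry an email; `link_to_reference` is an illustrative helper, not part of any library.

```python
from typing import Optional


def link_to_reference(
    external_rows: list[dict[str, str]],
    reference_ids: dict[str, int],
    key: str = "email",
) -> tuple[list[tuple[int, dict[str, str]]], list[dict[str, str]]]:
    """Split external rows into those matching a reference record and orphans."""
    linked: list[tuple[int, dict[str, str]]] = []
    orphans: list[dict[str, str]] = []
    for row in external_rows:
        # Normalize the join key the same way the reference table was normalized.
        value = row.get(key, "").strip().lower()
        ref_id: Optional[int] = reference_ids.get(value)
        if ref_id is not None:
            linked.append((ref_id, row))
        else:
            # No official record to attach this row to.
            orphans.append(row)
    return linked, orphans


if __name__ == "__main__":
    reference = {"ada@example.com": 1, "alan@example.com": 3}
    mentions = [
        {"email": "ADA@example.com", "text": "Loved the new release"},
        {"email": "grace@example.com", "text": "Support was slow"},
        {"text": "No contact details at all"},
    ]
    linked, orphans = link_to_reference(mentions, reference)
    print(f"linked: {len(linked)}, orphans: {len(orphans)}")  # linked: 1, orphans: 2
```

The orphan count is a rough first measure of how far an external source sits from the official records.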

Assembling these individual pieces of data can be more useful than viewing each in isolation, because together they provide context on what happened and why it happened. This new data is typically not fed into the enterprise data warehouse, archived offline or retained for e-discovery. Instead, once it has served its core purpose, the data administrator distills its key patterns, root causes and trends and then purges it from the system.

It is important to understand how data quality matters in this setting. First, all sources, actors and participants must have a known lineage and must remain accessible, visible and discoverable. John McPherson, an IBM Research colleague, said that when anybody refers to Big Data, they are referring to using data that has not been exploited in the past. The aim is not to determine whether a store is profitable, but to understand what contributes to its profitability and growth.

With Big Data, find quality-related issues in source data

Anyone who assembles data sets in an Apache Hadoop cluster that previously never cohabited the same database, or who tries to build a common view across them, is likely to be shocked by the quality issues. These issues are most commonly found in sources and data sets that have been underutilized.

Expect a nest of nasty discoveries; it is best to expect the unexpected. You can either go back to where the data was originally generated and fix it there, or work around the quality problems downstream. Because this previously underutilized data is now being exploited intensively, nerve-wracking quality problems are bound to surface. Complexity rises further when structured data is combined with new unstructured sources. This unstructured information, the new source of Big Data, is inconsistent and noisy, and non-transactional data in general can be unreliable and unpredictable. It is a good idea to establish best practices and shared methods before bringing this data together on a single platform.
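One form that "shared methods" can take is a common set of validation checks that every source must pass before it lands on the shared platform. A minimal sketch follows; the field names (`timestamp`, `source`, `value`), the three rules and the `validate` helper are hypothetical examples, not a standard rule set.

```python
from typing import Callable

# A rule takes one record and returns True if the record passes.
Rule = Callable[[dict[str, object]], bool]

# Hypothetical shared rules that every incoming source is checked against.
SHARED_RULES: dict[str, Rule] = {
    "has_timestamp": lambda r: bool(r.get("timestamp")),
    "has_source": lambda r: bool(r.get("source")),
    "non_negative_value": lambda r: isinstance(r.get("value"), (int, float))
    and r["value"] >= 0,
}


def validate(
    records: list[dict[str, object]], rules: dict[str, Rule] = SHARED_RULES
) -> dict[str, int]:
    """Count how many records violate each shared rule."""
    violations = {name: 0 for name in rules}
    for record in records:
        for name, check in rules.items():
            if not check(record):
                violations[name] += 1
    return violations


if __name__ == "__main__":
    records = [
        {"timestamp": "t1", "source": "sensor-7", "value": 21.5},
        {"timestamp": "", "source": "social-feed", "value": 3},
        {"timestamp": "t2", "value": -1},
    ]
    print(validate(records))
    # {'has_timestamp': 1, 'has_source': 1, 'non_negative_value': 1}
```

Keeping the rules in one shared place means every team measures quality the same way, rather than each working through the issues in isolation.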

Big Data can have more quality issues as data volume is greater

Big Data means more volume, more variety and more velocity, which naturally raises doubts about quality. Data cleanup does involve more effort, but according to Tom Deutsch, this is exactly where a Big Data platform excels: it is well suited to resolving data quality issues that have long bedeviled analysts. Traditionally, modelers have had to build models on training samples rather than on every record in the data set. The drawbacks of this were understood but mostly overlooked. Scalability constraints forced modelers to sacrifice granularity in their data sets in order to speed up model building, scoring and execution. For organizations that do not work with the full data population, this means overlooking outlier records and risking a skewed analysis of whatever survives the cut.

The problem here is not the quality of the data but the loss of resolution downstream when sparse records are blithely filtered out. The effect on the results can be similar to that of poor-quality data, and the noise in the complete data set is less of a risk than results compromised by discarding records. Sampling is not bad, but it is important to understand the benefit of removing those constraints when you have the option.
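The cost of sampling can be illustrated with a small simulation. This is a minimal sketch on entirely synthetic data: the transaction amounts, the 1,000 outlier threshold and the 1% sampling fraction are arbitrary assumptions, not figures from any real system.

```python
import random


def build_transactions(n_normal: int, n_outliers: int, seed: int = 0) -> list[float]:
    """Synthetic data: many ordinary amounts plus a handful of extreme ones."""
    rng = random.Random(seed)
    normal = [rng.uniform(10.0, 100.0) for _ in range(n_normal)]
    outliers = [rng.uniform(10_000.0, 50_000.0) for _ in range(n_outliers)]
    data = normal + outliers
    rng.shuffle(data)
    return data


def count_outliers(amounts: list[float], threshold: float = 1_000.0) -> int:
    """Count records above an (assumed) outlier threshold."""
    return sum(1 for amount in amounts if amount > threshold)


def sample(amounts: list[float], fraction: float, seed: int = 1) -> list[float]:
    """Simple random sample without replacement, as a sampled modeling workflow might use."""
    rng = random.Random(seed)
    k = max(1, int(len(amounts) * fraction))
    return rng.sample(amounts, k)


if __name__ == "__main__":
    data = build_transactions(n_normal=100_000, n_outliers=20)
    print(f"outliers in full data set: {count_outliers(data)}")
    print(f"outliers in 1% sample:     {count_outliers(sample(data, fraction=0.01))}")
```

A scan of the full data set finds all 20 extreme records. A 1% sample of roughly 100,000 records is expected to contain only about 0.2 of them on average, so in most runs a model trained on the sample never sees an outlier at all.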

Not all of this is easy. Bad data is comparatively easy to manage when data is aggregated from many sources, because a few missing or bad values are unlikely to cause misinterpretation. When evaluating a particular option, however, missing data can be a real problem. Big Data can be quality data’s best friend, or merely a bystander to quality problems that arise elsewhere.
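The contrast between aggregate analysis and evaluating a single option can be sketched in a few lines. The store names and revenue figures are invented for illustration, and skipping missing values is only one assumed way to aggregate; `aggregate_mean` and `evaluate_option` are hypothetical helpers.

```python
from statistics import mean
from typing import Optional


def aggregate_mean(values: list[Optional[float]]) -> float:
    """Average over the values that are present, skipping missing ones."""
    present = [v for v in values if v is not None]
    if not present:
        raise ValueError("no data available to aggregate")
    return mean(present)


def evaluate_option(values: dict[str, Optional[float]], option: str) -> Optional[float]:
    """Look up one specific record; a missing value here cannot be averaged away."""
    return values.get(option)


if __name__ == "__main__":
    store_revenue = {"store_a": 120.0, "store_b": None, "store_c": 95.0, "store_d": 110.0}
    # The aggregate still yields a usable figure from the three stores that reported.
    print(aggregate_mean(list(store_revenue.values())))
    # Evaluating store_b on its own is impossible: its value is simply missing.
    print(evaluate_option(store_revenue, "store_b"))  # None
```

The aggregate absorbs the gap, while the question about one particular store has no answer at all.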

Aditi Biswas, a writer with Maketick, Inc., loves blogging, posting informative content and sharing her views on technical updates. In her free time, she is an avid reader and pens her thoughts in verse.