In today’s data-driven world, the potential benefits of artificial intelligence (AI) are vast and varied. However, as AI becomes more prevalent, so does the concern over data protection. In the wrong hands, personal data can be used to manipulate people or even cause harm. So, can AI and data protection be compatible and work collaboratively?

The short answer is yes, but there are risks as well as benefits to consider. AI relies on data to learn and make informed decisions, while data protection is crucial for safeguarding individuals’ privacy and preventing data breaches. Striking the right balance between the two is essential for creating a trustworthy and ethical AI-powered future.

AI must be used ethically, with a focus on transparency and accountability. It is essential to ensure that the algorithms used are not biased and do not perpetuate existing inequalities or discrimination. Data protection regulations must also be strictly enforced to prevent the misuse of personal data and to maintain public trust in AI applications.

In this blog, we’ll explore the risks and potential benefits of combining AI and data protection. We will look at how AI can strengthen data protection measures, for example by detecting and preventing cyber threats, and how data protection can make AI more transparent and accountable. So, let’s dive into the fascinating and complex relationship between AI and data protection.

Risks Of AI In Data Protection

While the integration of AI and data protection has the potential to create a safer and more efficient digital world, the technology also carries inherent risks. AI-powered systems can be vulnerable to cyber-attacks, data breaches, and privacy violations, all of which can have significant consequences for individuals and organizations alike.

- Among the most significant risks of AI in data protection is algorithmic bias. AI systems learn from the data they are trained on, so if that data is biased or incomplete, the AI may replicate those biases in its decisions. This can perpetuate existing inequalities and discrimination, leading to unfair treatment of individuals and groups.

- Another risk is unauthorized access to personal data. Because AI systems collect and analyze vast amounts of data, they may inadvertently expose sensitive information to unauthorized individuals or entities. This can result in identity theft, financial fraud, and other forms of cybercrime.

- Additionally, AI-powered systems may be susceptible to hacking or manipulation. Cybercriminals may exploit vulnerabilities in these systems to gain access to sensitive data. This is particularly concerning for healthcare organizations, financial institutions, and other organizations that regularly handle sensitive data.
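Deny-by-default access control is one common safeguard against the unauthorized access described above. The Python sketch below is illustrative only: the role names and the `ROLE_PERMISSIONS` table are hypothetical, and in practice they would come from an organization's identity and access management system rather than from code.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission mapping; a real system would load this from policy config.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "analyst": {"read:aggregates"},
    "clinician": {"read:aggregates", "read:patient_records"},
    "admin": {"read:aggregates", "read:patient_records", "export:patient_records"},
}


@dataclass(frozen=True)
class User:
    name: str
    roles: frozenset[str]


def is_allowed(user: User, permission: str) -> bool:
    """Deny by default: grant only if at least one role explicitly holds the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user.roles)


def fetch_record(user: User, record_id: str, store: dict[str, dict]) -> dict:
    """Return a sensitive record only to users whose roles permit it."""
    if not is_allowed(user, "read:patient_records"):
        raise PermissionError(f"{user.name} may not read patient records")
    return store[record_id]
```

Unknown roles grant nothing, so a misconfigured account fails closed rather than open.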

Furthermore, AI-powered systems may become too complex to understand fully, making it hard to identify errors or biases. This can lead to a lack of transparency and accountability, reducing public trust in AI systems and data protection measures.
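One way to keep decisions understandable is to use, or compare against, an interpretable model whose output breaks down into per-feature contributions. The sketch below assumes a hypothetical logistic credit-scoring model with made-up weights and feature names; it illustrates the idea rather than recommending specific features or values. More complex models usually need dedicated post-hoc explanation tools instead.

```python
import math

# Hypothetical weights for an illustrative logistic credit-scoring model.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -3.0, "late_payments": -0.8}
INTERCEPT = -1.0


def score_with_explanation(applicant: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the approval probability and each feature's contribution to the log-odds,
    sorted from most to least influential."""
    contributions = {name: weight * applicant[name] for name, weight in WEIGHTS.items()}
    log_odds = INTERCEPT + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return probability, ranked


if __name__ == "__main__":
    prob, reasons = score_with_explanation({"income_k": 50, "debt_ratio": 0.5, "late_payments": 1})
    print(f"approval probability: {prob:.2f}")
    for feature, contribution in reasons:
        print(f"{feature}: {contribution:+.2f}")
```

For this hypothetical applicant, income raises the score while the debt ratio and one late payment lower it: the kind of reason an affected person can understand and contest.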

Bias and Discrimination

AI bias and discrimination are among the most pressing concerns when it comes to the integration of artificial intelligence in various industries. Bias and discrimination can occur when AI systems learn from biased or incomplete data and perpetuate these biases in their decision-making processes, leading to unfair treatment of individuals and groups.

AI bias and discrimination can have significant consequences, including perpetuating existing inequalities and reinforcing systemic discrimination. For example, an AI system used in recruitment that is trained on data biased against certain groups can lead to a lack of diversity and inclusion in the workplace. This can harm the affected individuals, reduce productivity, and limit innovation.
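A simple first check for the recruitment scenario above is to compare selection rates across groups. The sketch below computes each group's selection rate relative to the most-selected group and flags groups below 0.8, the "four-fifths" rule of thumb from US employment-selection guidance. The group labels and data are hypothetical, and passing this check does not by itself show that a model is fair.

```python
from collections import Counter


def selection_rates(outcomes: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of candidates selected in each group; outcomes are (group, selected) pairs."""
    totals = Counter(group for group, _ in outcomes)
    selected = Counter(group for group, chosen in outcomes if chosen)
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(outcomes: list[tuple[str, bool]]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's selection rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        return {group: 1.0 for group in rates}  # nobody selected: no disparity to measure
    return {group: rate / best for group, rate in rates.items()}


def flagged_groups(outcomes: list[tuple[str, bool]], threshold: float = 0.8) -> list[str]:
    """Groups whose ratio falls below the four-fifths (0.8) rule of thumb."""
    return sorted(group for group, ratio in impact_ratios(outcomes).items() if ratio < threshold)


if __name__ == "__main__":
    # Hypothetical screening results: group A 5 of 10 selected, group B 3 of 10.
    results = [("A", i < 5) for i in range(10)] + [("B", i < 3) for i in range(10)]
    print(impact_ratios(results))
    print(flagged_groups(results))
```

Here group B's ratio is 0.3 / 0.5 = 0.6, below 0.8, so it would be flagged for closer review.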

Additionally, AI bias and discrimination can lead to significant legal and ethical issues. For example, an AI system used in credit scoring that is biased against certain groups can result in discriminatory lending practices, which are unethical and, in many jurisdictions, illegal.

The dangers of AI bias and discrimination are further exacerbated in highly sensitive areas, such as healthcare and criminal justice. For instance, a diagnostic AI tool that is biased against certain populations can lead to misdiagnosis, delayed treatment, and, ultimately, harm to the patient’s health. Similarly, a criminal justice AI tool that is biased against certain groups can lead to wrongful convictions, perpetuating systemic injustice and discrimination.

It is essential to understand that AI bias and discrimination are not inherent to AI technology itself; they largely stem from the data used to train AI systems and from how those systems are designed. To mitigate these risks, AI systems must be transparent, explainable, and accountable. In addition, the data used to train them should be diverse, as free of bias as possible, and representative of the population the system is intended to serve.
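Checking whether training data is representative can start with something as simple as comparing each group's share of the data against a reference population. The sketch below assumes the reference shares are known (for example, from census or customer data) and uses a hypothetical tolerance of 5 percentage points.

```python
from collections import Counter


def representation_gaps(training_groups: list[str],
                        population_shares: dict[str, float]) -> dict[str, float]:
    """Training-data share minus reference-population share per group (negative = under-represented)."""
    counts = Counter(training_groups)
    total = len(training_groups)
    return {group: counts[group] / total - share for group, share in population_shares.items()}


def underrepresented(training_groups: list[str], population_shares: dict[str, float],
                     tolerance: float = 0.05) -> list[str]:
    """Groups whose share of the training data falls more than `tolerance` below their population share."""
    gaps = representation_gaps(training_groups, population_shares)
    return sorted(group for group, gap in gaps.items() if gap < -tolerance)
```

Collecting more data for flagged groups, or reweighting, is one possible response. Representative data alone does not guarantee unbiased outcomes, so model outputs still need to be evaluated separately.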

Risks Associated With Privacy Violations

The most significant privacy risk of AI is unauthorized access to personal data: AI systems may inadvertently expose sensitive information to unauthorized individuals or entities, which can result in identity theft, financial fraud, and other forms of cybercrime.
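Because AI systems can echo sensitive values from their inputs or training data, some teams filter model output before it leaves the system. The sketch below masks email addresses and card-like numbers that pass the Luhn checksum. The regular expressions and placeholder tokens are illustrative assumptions and would miss many other kinds of personal data.

```python
import re

# Illustrative patterns only: real PII detection needs broader, locale-aware rules.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")  # 13 to 16 digits, optional separators


def luhn_valid(candidate: str) -> bool:
    """Luhn checksum, used here to avoid masking arbitrary long numbers."""
    digits = [int(ch) for ch in candidate if ch.isdigit()]
    total = 0
    for position, digit in enumerate(reversed(digits)):
        if position % 2 == 1:  # double every second digit from the right
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


def redact(text: str) -> str:
    """Mask email addresses and Luhn-valid card numbers before text leaves the system."""
    text = EMAIL.sub("[EMAIL]", text)
    return CARD_CANDIDATE.sub(
        lambda match: "[CARD]" if luhn_valid(match.group()) else match.group(), text
    )
```

For example, `redact("Contact jane.doe@example.com, card 4111 1111 1111 1111 please")` masks both values, while a 13-digit order number that fails the checksum is left unchanged.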

Another risk is that AI systems may use personal data in ways individuals did not consent to. For example, an advertising system may use the personal data it collects to display targeted ads without individuals’ knowledge or consent. This can result in intrusive, unwanted advertising and undermine individuals’ privacy.
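One way to respect consent is to record which purposes each person agreed to and to filter data before it reaches a model. In the sketch below, the `Profile` type and the purpose names (such as "advertising") are hypothetical. A profile with no recorded consent is excluded by default.

```python
from dataclasses import dataclass, field


@dataclass
class Profile:
    user_id: str
    interests: list[str]
    consented_purposes: set[str] = field(default_factory=set)


def eligible_for(purpose: str, profiles: list[Profile]) -> list[Profile]:
    """Keep only profiles whose owners explicitly consented to this purpose (no consent means excluded)."""
    return [profile for profile in profiles if purpose in profile.consented_purposes]


if __name__ == "__main__":
    profiles = [
        Profile("u1", ["cycling"], {"advertising", "analytics"}),
        Profile("u2", ["cooking"], {"analytics"}),
        Profile("u3", ["travel"]),
    ]
    print([p.user_id for p in eligible_for("advertising", profiles)])
```

Tying consent to a specific purpose, rather than to a single yes/no flag, means data gathered for analytics is not silently reused for ad targeting.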

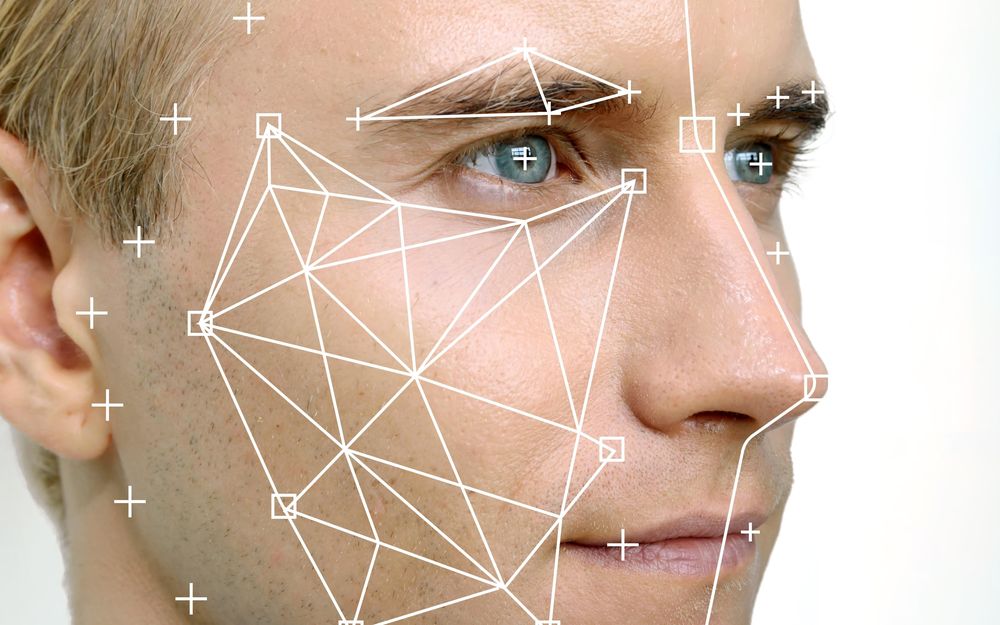

Additionally, AI systems that use facial recognition technology pose significant privacy risks. Such systems can be used for surveillance without individuals’ knowledge or consent, potentially violating their privacy rights.

For example, a facial recognition system deployed in public spaces could identify and track people as they move around, without their awareness or consent.

What Are the Potential Benefits Of AI In Data Protection?

There are several potential benefits of using AI in data protection. Let’s look at some of them:

- AI can help detect and prevent data breaches. With the growing volume of data being generated and processed, it can be challenging for human operators to monitor systems and identify threats in real time.

- AI algorithms can be trained to detect data patterns that may indicate a breach, such as unusual login patterns or unauthorized access attempts. By identifying these patterns early, companies can act quickly to limit the damage and prevent further infiltration. For example, if an employee suddenly logs in from a new device or location, the AI system can flag this as a potential threat and notify the security team.

- AI can be used to enhance data privacy. Under data privacy regulations such as GDPR and CCPA, companies need to ensure that personal data is adequately protected. AI can assist by automatically identifying personal data so that it can be anonymized or pseudonymized, and by helping ensure that only authorized personnel have access to sensitive information.

- AI can help to improve the efficiency and accuracy of data protection. For example, AI-powered systems can be used to automatically identify and classify data, making it easier for companies to manage and protect their data. Additionally, AI can be leveraged to automate routine data protection tasks such as backups and disaster recovery, freeing up human operators to focus on more complex issues.

- AI can also be used to anticipate potential threats. By analyzing data from past breaches and identifying common patterns, AI algorithms can estimate which systems and attack types are most likely to be targeted next. For example, if a company has experienced multiple breaches from a certain type of attack, the AI system can flag that a similar attack is likely and prompt preventive measures.

- AI can be used to monitor a company’s network for potential threats in real time. By analyzing network traffic and identifying anomalies, AI algorithms can detect potential threats before they become actual breaches. For example, if the AI system detects a sudden spike in network traffic to a certain server, it can flag this as suspicious and notify the security team.
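Production systems typically rely on learned models, but the two detection ideas in the list above, unfamiliar logins and traffic spikes, can be illustrated with simple baselines. The sketch below flags a login from a (device, country) pair never seen for that user, and flags a request count more than a chosen number of standard deviations above its recent mean (a z-score). The data shapes and the threshold of 3.0 are assumptions, and these rules stand in for, rather than reproduce, ML-based detection.

```python
from statistics import mean, stdev


def is_unfamiliar_login(user: str, device: str, country: str,
                        history: dict[str, set[tuple[str, str]]]) -> bool:
    """Flag a login whose (device, country) pair has never been seen for this user."""
    return (device, country) not in history.get(user, set())


def is_traffic_spike(recent_counts: list[int], current: int, z_threshold: float = 3.0) -> bool:
    """Flag the current count if it lies more than z_threshold standard deviations above the recent mean."""
    if len(recent_counts) < 2:
        return False  # not enough history to estimate spread
    mu, sigma = mean(recent_counts), stdev(recent_counts)
    if sigma == 0:
        return current > mu  # flat baseline: any increase is unusual
    return (current - mu) / sigma > z_threshold
```

With a baseline of `[100, 110, 95, 105, 90]` requests per minute (mean 100, sample standard deviation about 7.9), a burst of 400 would be flagged, while 110 would not. A learned model would replace these fixed rules with patterns estimated from historical data.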

Best Practices To Ensure AI and Data Protection Work Together To Boost Privacy And Security

When it comes to ensuring that AI and data protection work together to boost privacy and security, there are several best practices that organizations should follow:

- Privacy by Design: Organizations should incorporate privacy into the design of their AI systems from the outset. This means ensuring that data protection principles are baked into the design process rather than bolted on as an afterthought.

- Data Minimization: Organizations should collect and use only the minimum amount of data their AI systems need to function effectively. This reduces the risk of privacy breaches and limits the impact of any breaches that do occur.

- Regular Auditing: AI systems should be audited regularly to identify potential vulnerabilities and to confirm that they comply with relevant data protection laws and regulations. This reduces the risk of data breaches and keeps organizations aware of potential security risks.

- Explainability: AI systems should be transparent and explainable, so that individuals understand how decisions are made and can challenge those decisions if necessary. This helps build trust and ensures that individuals’ privacy rights are respected.

- Regular Training: Organizations should provide regular training to employees who work with AI systems to ensure that they are aware of data protection principles and understand how to use the systems in a way that respects individuals’ privacy rights.

- Continuous Improvement: Organizations should continuously monitor and improve their AI systems to ensure that they are up-to-date with the latest data protection best practices and can adapt to changing privacy and security requirements.
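Data minimization and pseudonymization can be combined in a small preprocessing step before data reaches an AI system. The sketch below assumes a hypothetical record layout with an `email` identifier and a hypothetical `REQUIRED_FIELDS` list. It drops every other field and replaces the email with a keyed hash (HMAC-SHA256), so the same person maps to the same pseudonym without the raw identifier being stored. In practice the key would come from a secrets manager, not from code.

```python
import hashlib
import hmac

# Hypothetical field list: only what the model actually needs.
REQUIRED_FIELDS = ("age_band", "region", "purchase_count")


def pseudonymize(identifier: str, key: bytes) -> str:
    """Keyed hash: stable for a given identifier, and cannot be recomputed without the key."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict, key: bytes) -> dict:
    """Keep only required fields and replace the direct identifier with a pseudonym."""
    reduced = {name: record[name] for name in REQUIRED_FIELDS if name in record}
    reduced["subject"] = pseudonymize(record["email"], key)
    return reduced


if __name__ == "__main__":
    raw = {"email": "a@example.com", "name": "A. Person", "phone": "555-0100",
           "age_band": "30-39", "region": "EU", "purchase_count": 3}
    print(minimize(raw, key=b"demo-key-not-for-production"))
```

Note that under GDPR, pseudonymized data is still personal data, so this step reduces risk but does not remove the data from the regulation's scope.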

Conclusion

While AI and data protection may seem like opposing forces at first glance, they can work together to boost privacy and security. By incorporating privacy by design, minimizing data collection, regularly auditing systems, ensuring explainability, providing regular training, and continuously improving systems, organizations can ensure that AI is used ethically and in a way that respects individuals’ privacy rights.

It is worth remembering that the ultimate goal of AI is to help us make better decisions and improve our lives. By carefully balancing the benefits of AI with the need to protect data, we can harness the power of this technology to unlock new insights, drive innovation, and improve society as a whole.

Beyond data security, organizations may also benefit from endpoint management and security solutions. These product suites typically include tools for software deployment, patch management, endpoint security, and more.

As AI continues to evolve, we must stay vigilant and ensure that it is used in ways that respect our privacy and security. By working together, AI and data protection can help us achieve this goal and build a better future for all.

Probely is the premier cloud-based application security testing solution, designed to help Security and DevOps teams work efficiently together in a DevSecOps approach that reduces risk across web applications and RESTful APIs.